20+ word2vec embedding python

Feature engineering. Reshape the context_embedding so that a dot product can be taken with the target_embedding, then return the flattened result.
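The dot-product step can be sketched in plain Python without a deep learning framework. This is only a toy illustration: the function name `score_contexts` and the vectors are assumptions made for the example and do not come from any library. It scores one target vector against several candidate context vectors and returns a flat list of scores.

```python
# Toy sketch: score one target word against several candidate context words.
# Function names and numbers are illustrative assumptions, not a library API.

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def score_contexts(target_embedding, context_embeddings):
    """Return a flat list with one dot-product score per context vector."""
    return [dot(target_embedding, c) for c in context_embeddings]

target_embedding = [1.0, 0.0, 2.0]
context_embeddings = [
    [0.5, 1.0, 0.5],   # stands in for the true context word
    [-1.0, 0.0, 0.0],  # stands in for a negative sample
]
scores = score_contexts(target_embedding, context_embeddings)
print(scores)
```

A higher score means the model considers the pair more likely to be a genuine (target, context) pair.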



Related topics: word embedding (word2vec) and word embedding with Global Vectors (GloVe). I'm using Gensim, if it matters.

It was developed by Tomas Mikolov et al. I'm using the word2vec embedding as a basis for finding distances between sentences and documents.
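One common way to get a distance between sentences from word vectors is to average the vectors of each sentence and compare the averages with cosine distance. The sketch below assumes that approach; the tiny `word_vectors` table is hypothetical and stands in for vectors that a trained model would supply.

```python
import math

# Hypothetical 3-dimensional word vectors; a trained model would supply real ones.
word_vectors = {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.9, 0.1, 0.0],
    "car": [0.0, 0.0, 1.0],
    "sleeps": [0.0, 1.0, 0.0],
    "drives": [0.0, 0.2, 0.9],
}

def sentence_vector(tokens, vectors):
    """Average the vectors of the tokens that are in the vocabulary."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        raise ValueError("no known tokens in sentence")
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]

def cosine_distance(u, v):
    """1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

a = sentence_vector(["cat", "sleeps"], word_vectors)
b = sentence_vector(["dog", "sleeps"], word_vectors)
c = sentence_vector(["car", "drives"], word_vectors)
print(cosine_distance(a, b), cosine_distance(a, c))
```

Averaging ignores word order, so this is a rough baseline rather than a full document-similarity method.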

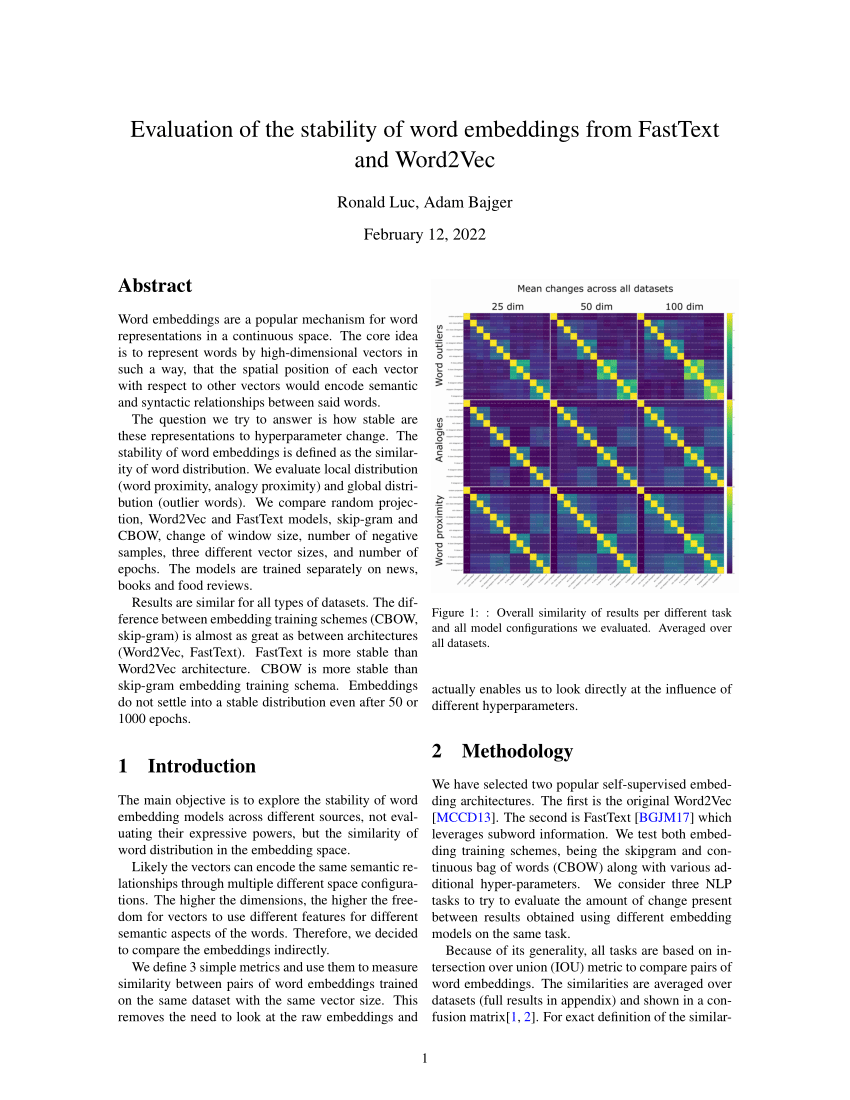

In 2013, Google announced word2vec, a group of related models that are used to produce word embeddings. A word embedding is a representation of a word as a numeric vector. As a language modeling technique, it maps words to vectors of real numbers.

It represents words or phrases in a vector space with several dimensions. Pre-trained word embeddings such as GloVe can be used in NLP models. Word2Vec is a statistical method for efficiently learning a standalone word embedding from a text corpus.

In a transformer, given a sentence, is it enough to look up the corresponding 300-dimensional word2vec vectors as the embedding and then do something like fine-tuning? See Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. Gensim's Word2Vec implementation lets you train your own word embedding model for a given corpus.

So, for this problem, Word2Vec came into existence. Word2vec is an approach to creating word embeddings.

I'm using a size of 240. Learn paragraph and document embeddings via the distributed memory and distributed bag of words models from Quoc Le and Tomas Mikolov.

Supported inputs are .bz2, .gz, and text files. What is word embedding? Bidirectional Encoder Representations from Transformers (BERT).

Python (most commonly pronounced "Paiton"; the name is borrowed from the British show Monty Python) is an interpreted, object-oriented, high-level programming language with strong dynamic typing. Let's implement our own skip-gram model in Python by deriving the backpropagation equations of our neural network. The directory must only contain files that can be read by gensim.models.word2vec.LineSentence.
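As a rough idea of what such an implementation involves, here is a minimal sketch of a single gradient step. It uses the negative-sampling objective rather than a full softmax, which is a simplification chosen for brevity. The function name `sgns_step`, the learning rate, and the random initial vectors are all assumptions made for this example. For a score s = u·v, the derivative of the loss with respect to s is sigmoid(s) minus the label (1 for the true context word, 0 for a negative sample).

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sgns_step(v_center, u_context, u_negatives, lr=0.1):
    """One SGD step on the skip-gram negative-sampling loss.

    Updates all vectors in place and returns the loss measured before the update.
    """
    grad_center = [0.0] * len(v_center)
    loss = 0.0
    examples = [(u_context, 1.0)] + [(u, 0.0) for u in u_negatives]
    for u, label in examples:
        p = sigmoid(dot(u, v_center))
        loss -= math.log(p if label == 1.0 else 1.0 - p)
        g = p - label  # derivative of the loss w.r.t. the score u . v_center
        for i in range(len(u)):
            grad_center[i] += g * u[i]    # accumulate using the old u[i]
            u[i] -= lr * g * v_center[i]  # update the output vector
    for i in range(len(v_center)):
        v_center[i] -= lr * grad_center[i]
    return loss

random.seed(0)
dim = 4
v_c = [random.uniform(-0.5, 0.5) for _ in range(dim)]
u_o = [random.uniform(-0.5, 0.5) for _ in range(dim)]
u_neg = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(3)]

# Repeating the step on the same example should drive the loss down.
losses = [sgns_step(v_c, u_o, u_neg) for _ in range(50)]
print(losses[0], losses[-1])
```

A complete model would loop over (center, context) pairs drawn from a corpus and draw fresh negative samples for each pair.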

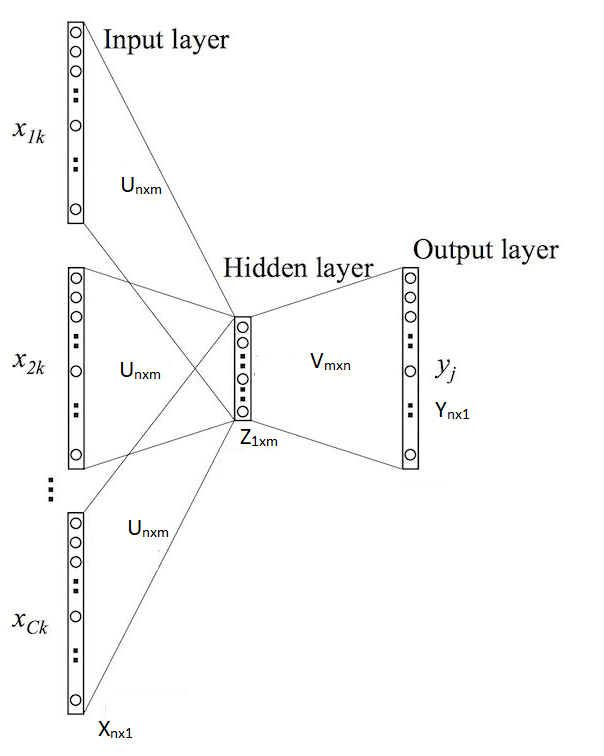

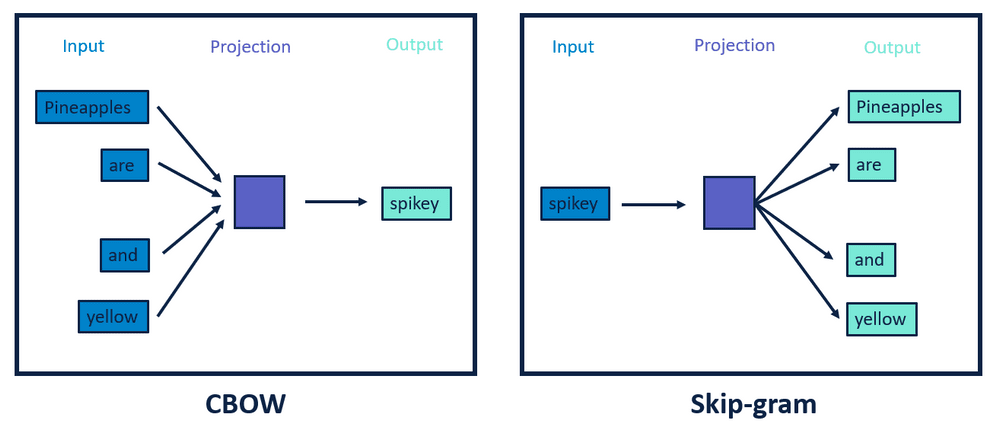

They are a key breakthrough that has led to great performance of neural network models on a suite of NLP tasks. The steps are: import packages, read data, preprocessing, partitioning. In the skip-gram architecture of word2vec, the input is the center word and the predictions are its context words.
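To make the skip-gram setup concrete, the sketch below generates (center, context) training pairs from a token list. The helper name `skipgram_pairs` and the window size are assumptions chosen for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token and its neighbours."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs(["the", "quick", "brown", "fox"], window=1)
for center, context in pairs:
    print(center, "->", context)
```

Each pair is one training example: the model receives the center word and is trained to predict the context word.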

The algorithms use either hierarchical softmax or negative sampling. Python: word embedding using Word2Vec. `class gensim.models.word2vec.PathLineSentences(source, max_sentence_length=10000, limit=None)`.

The Dataset for Pretraining Word Embeddings. Google's pretrained Word2Vec model was trained on the Google News dataset (about 100 billion words). Word embedding is a language modeling and feature learning technique that maps words into vectors of real numbers using neural networks, probabilistic models, or dimension reduction on the word co-occurrence matrix.

Text Cleaning and Pre-processing. In Natural Language Processing (NLP), most text and documents contain many words that are redundant for text classification, such as stopwords, misspellings, slang, etc. Chinese Word Vectors (中文词向量). Word2Vec was developed at Google in 2013 as a response to make the neural-network-based training of the embedding more efficient, and it has since become the de facto standard for developing pre-trained word embeddings.
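A minimal cleaning pass might lowercase the text, keep only alphabetic tokens, and drop stopwords. The sketch below assumes exactly that; the `STOPWORDS` set is a tiny hypothetical list, and real projects usually rely on a much larger one.

```python
import re

# A tiny illustrative stop-word list (an assumption for this example).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "and", "to", "in"}

def clean_text(text, stopwords=STOPWORDS):
    """Lowercase, keep alphabetic tokens only, and remove stopwords."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in stopwords]

tokens = clean_text("The cat is sitting in the garden, and the dog barks!")
print(tokens)
```

Handling misspellings and slang needs additional steps, such as a spelling dictionary or normalization table, which this sketch leaves out.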

Word2Vec is one of the most popular pretrained word embeddings developed by Google. The setup is: `from gensim.models.word2vec import Word2Vec`, `from multiprocessing import cpu_count`, `import gensim.downloader as api`; download the dataset with `dataset = api.load("text8")` and `data = [d for d in dataset]`, then split the data into 2 parts. Word embedding is a word representation type that allows machine learning algorithms to understand words with similar meanings.

Working with Word2Vec in Gensim is the easiest option for beginners due to its high-level API for training your own CBOW and Skip-Gram models or running a pre-trained word2vec model. In this part, we discuss two primary methods of text feature extraction: word embedding and weighted word. Word2Vec is a neural network structure that generates word embeddings by training the model on a supervised classification problem.

I have a collection of 200,000 documents, averaging about 20 pages in length each, covering a vocabulary of most of the English language. PathLineSentences is like LineSentence, but processes all files in a directory in alphabetical order by filename. Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation.

Word Similarity and Analogy. This project provides 100 Chinese Word Vectors (embeddings) trained with different representations (dense and sparse), context features (word, ngram, character, and more), and corpora. One can easily obtain pre-trained vectors with different properties and use them for downstream tasks.

Google's Word2vec pretrained word embedding. Besides word2vec, there exist other methods to create word embeddings, such as fastText, GloVe, ELMo, BERT, GPT-2, etc. TransE algorithm (Translating Embedding).

You could also use a concatenation of both embeddings as the final word2vec embedding. Word embeddings are a technique for representing text where different words with similar meanings have similar real-valued vector representations. Word2Vec consists of models for generating word embeddings.

If you are not familiar with the concept of word embeddings, below are the links to several great resources. Gensim is an open-source Python library for natural language processing. Develop a Deep Learning Model to Automatically Classify Movie Reviews as Positive or Negative in Python with Keras, Step-by-Step.

Word embeddings can be generated using various methods, such as neural networks, co-occurrence matrices, probabilistic models, etc. Word2Vec using the Gensim library.

Fitting a Word2Vec with gensim, feature engineering, deep learning with tensorflow/keras, testing. Overview of word embedding using Embeddings from Language Models (ELMo). Distributed Representations of Sentences and Documents.

The target_embedding and context_embedding layers can be shared as well. Python is dynamically typed and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented, and functional programming. It is often described as a "batteries included" language.
